Hmmm, you're right in that humans don't play randomly.

Its a very elegant use of neural nets to improve the MCTS template.

#Stockfish chess mcts manual

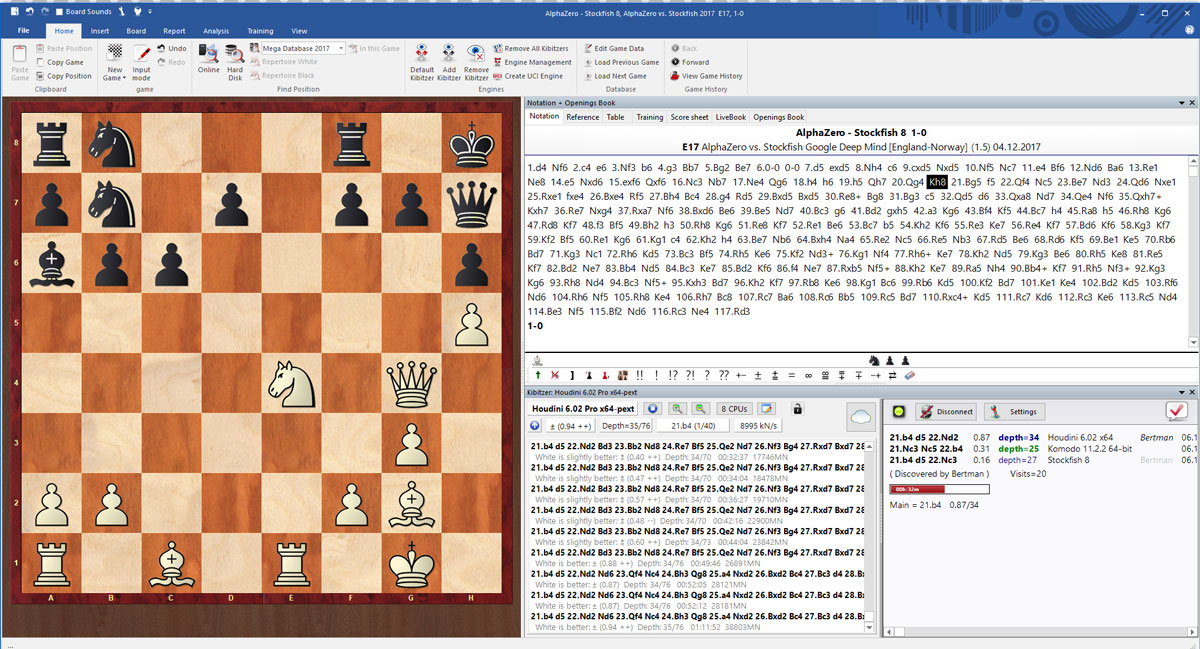

AlphaZero was interesting in that this manual tweaking of MCTS was left to the neural network training. Alpha-Beta search doesn't have any concept of this, and requires a Quiescence search engine "tacked on" for the feature. But its still very much a manually tweaked process: how much time to dedicate "exploring" bad moves, and how much time spent "exploiting" good moves (analyzing and making sure a "good" move is as good as you think it is). MCTS has a more elegant statistical theory behind it. The formula has some statistical guarantees about finding results that I never really understood, but hope to research and math-out one day. The precise formula involves a logarithm, division, and I think a square-root. Ex: every time you search a winning position 10,000 times, you want to search losing positions 100 times. The multiarmed bandit heuristic is to search "winning" positions more, but to "explore" losing positions less. Positions of "loss" or "draw" will be seen as a miss in the multi-armed bandit, but the "exploration" phase of the algorithm will randomly search a position anyway. Positions of "win" are seen as a "win" with a multi-armed bandit, and are explored further and analyzed further. It will NOT return until it gets that kind of evaluation. MCTS analyzes "deeper" positions, while Stockfish analyzes more exhaustively.Īnd again: classical MCTS (probably not AlphaZero) will keep going until it evaluates a position as "win", "loss", or "draw". MCTS on the other hand is almost ENTIRELY a quiescence search. Under certain conditions, Stockfish will search deeper (but not all conditions). I think my point is that Quiescence search is an explicitly programmed feature of Stockfish. Stockfish is also multithreaded and got a hash-table to remember previously searched positions (even positions from "another" branch of the tree). Stockfish uses iterated deepening as you've said, but that's very similar to Breadth-first search. Breadth-first search with Alpha-Beta pruning is how it is taught at universities.

Nonetheless, the classical chess search algorithm is exhaustive. For example, Stockfish may recognize that some moves are "forced" and will iterate a specific variation deeper. The pruning in Alpha-beta is very much a heuristic that is applied on top of the template. So MCTS just naturally works with neural nets very well. which btw, performs way better than you'd expect.) might be used to "bootstrap" the exploration weights. Its possible that the original randomized MCTS algorithm (search randomly. Most of the input layers / early hidden-layers can be "recycled" between the "explore" function and the "evaluation" function.ĮDIT: I don't know how AlphaZero gets its "exploration" network trained up however. It seems like a very good way to have a single neural network mostly perform double-duty (aside from the two output nodes). It uses a neural-net to find "interesting" positions to guide the search, and the same neural net also evaluates the position (who is winning or losing). MCTS implemented by AlphaZero is different however. Think of it as a random-sample of the win/loss potential of a move. The reasoning is simple: on the average, a lot of "samples" of playthroughs will lead to a better estimate for win/loss probability. In effect, its a depth-first search, you play until you make a conclusion (potentially a "bad" conclusion). Classically, the original MCTS algorithms for Go would play all the way to the end of a game before searching other parts of the tree. Its an exhaustive search, like a breadth-first search. Monte-Carlo seems more akin to how humans play games.Īlpha-Beta pruning is the classical computer science algorithm, easier to understand, describe, and analyze.

0 kommentar(er)

0 kommentar(er)